Advanced analytics at scale optimize asset management

Every process manufacturing facility in operation depends on critical equipment and components that must run smoothly to ensure reliable production and minimize failures. Particularly as infrastructure ages, commodity prices fluctuate, and pressure mounts to reduce greenhouse gas emissions and operating expenditures (OPEX), it is critical for manufacturers to adopt robust maintenance plans to keep equipment in excellent working order.

Effective strategies can be built by leveraging insight-driven, self-service analytics applied to an oft-underused resource — data. The ideal source data for developing these strategies include sensor-produced process data, mechanical asset data, historical work order information, equipment design specifications and subject matter expertise. Weaving all this information together is difficult, especially at an enterprise scale, but it becomes much more feasible with the right software tools.

Inefficient maintenance strategies

In many facilities and companies, process data is siloed in standalone process historians, or in control subsystems scattered throughout the enterprise. Adding to the clutter, equipment specifications often reside on hard copy drawings that are not available electronically, work order data sits in a computerized maintenance management system, and mechanical diagnostic data is kept in a separate repository or a vendor’s database. The first step in performing equipment analysis is to unify the information from these disparate sources in a central environment.

Once the data is consolidated — which was traditionally done by exporting raw data from each source and appending it in a single spreadsheet — applying calculations and analytics can be cumbersome if the data is not properly structured, or timestamps are not aligned. This typically leads to time-consuming Extract, Transform, Load (ETL) data cleansing procedures and preparation steps before meaningful context can be extracted from the data.

Even after completing this process for one machine or cell area of the facility, the manual workflow must be repeated for all other equipment, consuming valuable process expert, data scientist and operator time. For this and other reasons, some facilities adopt a run-to-failure maintenance philosophy, a common practice where consolidated data, and especially insight drawn from it, is lacking. However, this approach carries a continual risk of unplanned downtime and unexpected maintenance costs. It also increases safety risks for both the equipment and its operators.

To mitigate several pitfalls of running to failure, other manufacturers implement scheduled preventive maintenance (PM), a strategy that aims to drastically reduce failures and maintain equipment quality by taking it offline for service at regular intervals, regardless of its condition. While scheduled PM is a better approach than run-to-failure, it is far from foolproof.

Scheduled PM becomes particularly challenging in large enterprise settings because of the volume of equipment to maintain. To keep it cost-effective, priority must be placed on critical or frequently failing components, mostly ignoring the rest until problems become apparent. Additionally, the equipment pulled for service does not always require attention.

By instead instituting preemptive and condition-based approaches to maintenance, service can be performed when and where equipment condition warrants it, keeping ahead of most failures. With the right data analysis and insight generation software in place, manufacturers can effectively manage long lists of assets, prioritizing maintenance based on current and forecasted conditions. This helps maintenance personnel choose the most efficient times to take equipment offline for service.

Improving asset management with live connectivity to data and scaling tools

Implementing condition-based maintenance begins by establishing connections to all sources where the relevant data resides. This can be done using cloud-based, advanced analytics applications, with the ability to connect natively to a variety of data sources. The list includes process historians for sensor data, cloud data stores for equipment design information, SQL-based databases for historical maintenance data and others. These connections read data directly from the source without modifying, duplicating or manipulating the source information in any way. These applications also provide the ability to read hierarchy information across groups of assets, supporting scalability. Where hierarchies do not already exist, point-and-click tools empower end-users to quickly create these on the fly, whether for a small use case, or to build out the entire facility, region or enterprise.

Once live connections are established to data sources, an advanced analytics application aligns timestamps and applies basic interpolation, eliminating time-consuming ETL efforts. This gives subject matter experts (SMEs), such as process and reliability engineers, a head start in applying analytics to their data through easy-to-use, point-and-click, low-code tools, in addition to other time-series-specific functions. Built-in hierarchy capabilities enable SMEs to apply analytics to other assets with ease, via asset swapping and scaled-out asset treemap views (Figure 1).
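As a rough illustration of what timestamp alignment with basic interpolation involves, the sketch below resamples two sensors logged at different rates onto one shared time grid using linear interpolation. It is not how any particular analytics application implements this step; the function names, sensor names and sample values are all hypothetical.

```python
from bisect import bisect_left

def interpolate_at(times, values, t):
    """Linearly interpolate a (times, values) series at time t (seconds).
    Returns None outside the sampled range rather than extrapolating."""
    if not times or t < times[0] or t > times[-1]:
        return None
    i = bisect_left(times, t)
    if times[i] == t:
        return values[i]
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)

def align(series_by_name, grid):
    """Resample every series onto the same time grid."""
    return {
        name: [interpolate_at(ts, vs, t) for t in grid]
        for name, (ts, vs) in series_by_name.items()
    }

# Hypothetical data: two sensors sampled at different, offset intervals.
raw = {
    "vibration": ([0, 10, 20, 30], [1.0, 2.0, 3.0, 4.0]),
    "temperature": ([5, 25], [50.0, 70.0]),
}
grid = [0, 5, 10, 15, 20, 25, 30]
aligned = align(raw, grid)
```

After alignment, every signal has one value per grid point (or None where the sensor has no coverage), so calculations that combine signals can be applied row by row.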

Various visualization tools can be used to develop comprehensive displays — including trends, tables and histograms — and publish them in dashboards to democratize analytics, empowering cross-functional teams at a facility to make quicker and better-informed decisions. These dashboards can provide static reports or update live, alerting teams of deteriorating asset performance in near-real time. This collaboration and knowledge sharing is made easy in cloud-based applications, accessed through a web browser via URL, with the appropriate access permissions.

Value at scale using advanced analytics

Digital leaders throughout the process industries are embracing new self-service analytics technologies to take full advantage of their data investments, helping solve increasingly complex asset management use cases.

Use case #1: Bearing failure prediction

Rotating machinery presents challenges when establishing proper maintenance and servicing schedules. Specifically, bearings can fail at irregular intervals, limiting the usefulness of scheduled PM.

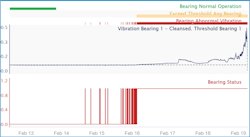

In one scenario, a bearing failed suddenly, damaging the compressor and causing significant downtime. Leveraging Seeq, an advanced analytics application, the engineering team performed a root cause analysis, noting that vibration sensor readings provided reasonable indicators of potential issues. Based on this knowledge, they built an empirical model to identify when vibration readings deviate from statistically “normal operation,” comparing actual operation to a training data set.
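One simple way to express this kind of empirical model is a statistical band derived from a training period of known-good operation, with later readings flagged when they fall outside it. The sketch below assumes a mean plus or minus three standard deviations band; the article does not state the team's actual model form, and the function names and vibration values are hypothetical.

```python
from statistics import mean, stdev

def fit_normal_band(training, n_sigma=3.0):
    """Derive lower and upper limits from a period of normal operation."""
    mu, sigma = mean(training), stdev(training)
    return mu - n_sigma * sigma, mu + n_sigma * sigma

def flag_deviations(samples, band):
    """Return True for each sample that falls outside the normal band."""
    low, high = band
    return [not (low <= x <= high) for x in samples]

# Hypothetical vibration velocity readings (mm/s) from a healthy period.
training = [2.0, 2.1, 1.9, 2.0, 2.2, 1.8, 2.0, 2.1, 1.9, 2.0]
band = fit_normal_band(training)

# Hypothetical recent readings; the last two drift away from normal.
recent = [2.0, 2.1, 2.6, 3.4]
flags = flag_deviations(recent, band)
```

The width of the band (n_sigma) trades early warning against false alarms, so it would normally be tuned against past failure events.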

The model indicated a point where maintenance was required, just a few days prior to the failure. The team then consulted their resident data scientist to evaluate whether applying a machine learning model could offer even more insight on the bearing’s condition.

Using normal operation data in addition to the failure data as training sets, the data scientist analyzed the pre-contextualized data in a Python environment and applied an anomaly detection algorithm, which generated a bearing status signal, providing an additional two days of notice on bearing degradation ahead of failure.
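The article does not name the anomaly detection algorithm used. As a stand-in, the sketch below scores each new reading by its mean distance to the k nearest points recorded during normal operation, and converts that score into a simple status signal. The two features, the threshold rule and all values are hypothetical, and the features are assumed to be on comparable scales already. Failure data, which the data scientist also used, could serve to tune the threshold but is omitted here.

```python
from math import dist

def knn_score(point, reference, k=3):
    """Mean distance from point to its k nearest reference points.
    Larger scores mean the point looks less like normal operation."""
    nearest = sorted(dist(point, ref) for ref in reference)[:k]
    return sum(nearest) / len(nearest)

# Hypothetical (horizontal, vertical) vibration velocity pairs in mm/s,
# recorded during normal operation.
normal = [(2.0, 1.5), (2.1, 1.6), (1.9, 1.4), (2.0, 1.6), (2.2, 1.5)]

# Assumed threshold rule: the largest leave-one-out score found inside
# the normal data, widened by a 50% margin.
loo = [knn_score(p, normal[:i] + normal[i + 1:]) for i, p in enumerate(normal)]
threshold = 1.5 * max(loo)

def bearing_status(point):
    """Map an anomaly score to a two-state bearing status signal."""
    return "abnormal" if knn_score(point, normal) > threshold else "normal"
```

For example, `bearing_status((2.05, 1.55))` falls within the normal cluster, while a reading such as `(3.5, 2.8)` lies far from every reference point and is reported as abnormal.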

The data used to perform the root cause analysis and create the subsequent bearing prediction models already existed in a process data historian, and it was correlated with additional vendor testing data stored in a cloud SQL table. Both sets were connected to the advanced analytics application, then a hierarchy was created, enabling calculations to be quickly propagated from one bearing to others on similar compressors.

The final trend was placed in a dashboard, and scaled out to similar assets throughout the facility. Now, the maintenance team is notified of abnormal operation in their compressor fleet an estimated average of one week before a failure would otherwise occur (Figure 2).

This early warning enables proactive service of bearings to extend run life, and it provides time to order parts and reallocate resources.

Use case #2: Pump health monitoring

Pump reliability has become increasingly important, leading to an industry focus on better methods for measuring pump health in near-real time. The state of overall pump health is informed by identifying signs of degradation and aggregating these into a single predictive pump health metric.

A power and utilities provider with critical pump assets implemented a pump performance monitoring solution to reduce failures. To access the necessary data for analysis, Seeq was deployed with live connections to a process historian for operating data, and to SQL tables for historical work orders. Design data was aggregated via subject matter expertise and design data sheets.

Once the data became available centrally in the advanced analytics application, SMEs cleansed the data by removing outliers, noise and dropouts. First principles calculations and conditions were then applied, creating various operating mode indicators derived from pump curve and data sheet specifications.
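A minimal sketch of this kind of cleansing is shown below, assuming dropouts appear as missing values, outliers can be identified by physical limits, and noise is suppressed with a rolling median. The limits, window size and flow values are hypothetical, and a real workflow would carry timestamps alongside the values.

```python
from statistics import median

def cleanse(values, low, high, window=3):
    """Remove dropouts (None) and out-of-range outliers, then smooth the
    remaining samples with a centered rolling median."""
    valid = [v for v in values if v is not None and low <= v <= high]
    half = window // 2
    return [
        median(valid[max(0, i - half): i + half + 1])
        for i in range(len(valid))
    ]

# Hypothetical pump flow readings (m3/h) with a dropout (None),
# a spike (950.0) and a false zero (0.0).
raw_flow = [100.0, 101.0, None, 99.0, 950.0, 100.0, 0.0, 102.0]
clean_flow = cleanse(raw_flow, low=50.0, high=200.0)
```

The rolling median is chosen here over a moving average because a single leftover spike shifts a median far less than it shifts a mean.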

Next, pump health indicators were rolled up into a single health score metric, providing an overall view of daily pump performance. The utility now uses these health scores to drive proactive maintenance intervention.
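One common way to roll several indicators into a single score is a weighted average, sketched below. The indicator names, the 0 to 100 scale and the weights are hypothetical; the article does not describe how the utility weighted its indicators.

```python
def health_score(indicators, weights):
    """Weighted average of indicator scores, each on a 0-100 scale
    where 100 means fully healthy."""
    total_weight = sum(weights[name] for name in indicators)
    weighted = sum(indicators[name] * weights[name] for name in indicators)
    return weighted / total_weight

# Hypothetical relative importance of each indicator.
weights = {
    "efficiency": 4,
    "vibration": 3,
    "seal_temperature": 2,
    "time_off_curve": 1,
}

# Hypothetical daily indicator scores for one pump.
today = {
    "efficiency": 90.0,
    "vibration": 70.0,
    "seal_temperature": 80.0,
    "time_off_curve": 100.0,
}
score = health_score(today, weights)  # (360 + 210 + 160 + 100) / 10
```

Because the weights are normalized inside the function, an indicator that is unavailable on a given day can simply be left out of the input without distorting the scale.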

This analysis was scaled out to multiple pumps throughout the enterprise by creating hierarchies via a point-and-click asset grouping tool in Seeq. Live-updating dashboards were assembled and published to display the performance of the pump fleet, with daily review by plant staff. This activity helps the team establish maintenance priorities, and it provides greater confidence in pump reliability, decreasing risks to operation and the business.

Use case #3: Valve erosion prediction

Valves are found throughout process facilities, playing vital roles in process control. Advanced analytics solutions can provide low-effort, high-return opportunities to monitor the condition of these critical assets and reduce unexpected failures.

An oil and gas producer operating more than 50 well pads — each with a gathering system containing a critical flow control valve — was experiencing frequent valve failures. Each failure occurrence rendered the pad inoperable for days, until repairs could be made. These failures were typically caused by sand erosion, and the producer had no methodology in place to identify early warning signs aside from manual inspections requiring shutdown, which became cost prohibitive as the asset base grew.

By deploying Seeq to monitor process conditions, the producer was empowered to predict erosion progression, and approximate the time to valve failure. To establish this analysis, SMEs leveraged real-time and historical process data to calculate a metric indicating progressive erosion in the valve seat, thereby establishing an indicator to predict future failures. The team used first principles to produce this metric, which was leveraged as the basis for a predictive model to approximate time to failure (Figure 4).
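To illustrate how an erosion metric can be turned into an approximate time to failure, the sketch below fits a straight line to the metric's history and extrapolates it to a failure threshold. The linear trend, the threshold and the metric values are assumptions made for illustration; the article does not specify the producer's first principles metric or the form of its predictive model.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def days_to_threshold(days, metric, threshold):
    """Days from the last sample until the fitted trend reaches threshold.
    Returns None when the metric is not trending upward."""
    slope, intercept = fit_line(days, metric)
    if slope <= 0:
        return None
    crossing = (threshold - intercept) / slope
    return max(0.0, crossing - days[-1])

# Hypothetical daily erosion metric (dimensionless) for one valve.
days = [0, 1, 2, 3, 4]
erosion = [0.10, 0.12, 0.14, 0.16, 0.18]
remaining = days_to_threshold(days, erosion, threshold=0.30)
```

With these sample values the metric rises by 0.02 per day, so the fitted trend reaches the 0.30 threshold roughly six days after the last sample.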

This analysis was scaled to all well pads by leveraging historian hierarchies imported into the analytics application through its native connectors. The ability to forecast failures enabled the maintenance team to prioritize service, significantly reducing operational downtime and OPEX.

Data-driven advantages

Equipment maintenance is critical to steady plant operation, which correlates directly with profitability. Unlocking the immense value of process data was a challenge in the past, but today’s advanced analytics applications empower process manufacturers to extract insights from data, providing decision-making information that can be scaled enterprise-wide.

This is a vital advantage for growth. Applied effectively, these insights support predictive modeling and a host of condition-driven actions, improving operational and asset management efficiency.

Kjell Raemdonck is a customer success manager at Seeq, where he helps customers discover new ways to interact with their process data and drive continuous improvement using advanced analytics software. Prior to his role at Seeq, he served in engineering positions at Devon Energy and Canadian Natural Resources Limited. He holds a BASc in Chemical Process Engineering from the University of British Columbia.

Seeq Corporation
