It’s time to break up by embracing the software-defined control journey

Today’s process manufacturing facilities operate in a complex, highly competitive environment. Constantly shifting marketplace needs are intersecting with supply chain complications, along with new demands for sustainability, availability and productivity, creating an inflection point. These and other pressures require operations teams to run at peak efficiency so they can maintain a competitive advantage.

One of the key elements of maximizing operational efficiency is increasing flexibility to unlock agile manufacturing. Today, top-performing operations teams can support that drive by embracing a boundless automation vision that integrates operations through a next-generation automation system. This type of system can seamlessly move data from the intelligent field through the edge and into the cloud to optimize operations across the enterprise. A boundless automation architecture breaks down silos and democratizes data by creating a modern environment where it is easier and more efficient to move and manipulate data, as well as to execute real-time control.

At the heart of this paradigm shift is a decoupling journey that started decades ago but is still shaping the future of process manufacturing. Understanding that journey is critical to building a successful foundation for a boundless automation future — a future that will unlock the flexibility necessary to drive competitive advantage in the years to come. Many of the new technologies enabling this future are already here. And while some of the most transformative technologies are just over the horizon, operations teams should take steps today to be ready for those solutions when they arrive.

Decoupling I/O and the controller

A key step on the road to more flexible operations occurred when automation suppliers decoupled I/O from the controller. In early automation systems, I/O had to be terminated in marshalling cabinets and wired to I/O cards attached to the controller. Project teams had to engineer every signal connection, producing complex cabinet designs and wiring diagrams, before any work could take place. Typically, this meant teams were locked into a design once decisions were made. Any significant change later in the project was extremely costly and time-consuming because it set off a chain reaction of required modifications to design, equipment and workloads.

Using electronic marshalling technology, project teams began to break free of the complexities inherent in traditional designs by decoupling I/O from the controller, which eliminated the need to marshal I/O. Today, teams use flexible modules to define I/O as needed, avoiding the problems associated with late-stage design changes. As needs change, whether during project implementation or later in operations, teams can make the process and equipment changes they need to stay flexible without turning every change into a project. Each controller now has access to any I/O in the plant, regardless of where that I/O is physically connected.
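To make "any controller, any I/O" concrete, here is a minimal Python sketch, assuming a hypothetical plant-wide registry keyed by tag. The names `IOChannel`, `IORegistry` and `characterize` are illustrative only and do not represent any supplier's electronic marshalling API. The point is that control logic addresses a signal by its tag, so relanding the signal in another cabinet changes a registry entry rather than the logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IOChannel:
    """One field signal, identified by its tag rather than its wiring position."""
    tag: str          # hypothetical tag name, e.g. "FT-101"
    cabinet: str      # where the signal happens to be landed
    slot: int         # terminal/slot position in that cabinet
    signal_type: str  # "AI", "AO", "DI" or "DO"


class IORegistry:
    """Hypothetical plant-wide I/O directory. Controllers resolve signals by
    tag, so control logic never depends on physical landing location."""

    def __init__(self) -> None:
        self._channels: dict[str, IOChannel] = {}
        self._values: dict[str, float] = {}

    def characterize(self, channel: IOChannel) -> None:
        # Adding or relocating a signal is a registry update, not a rewiring job.
        self._channels[channel.tag] = channel
        self._values.setdefault(channel.tag, 0.0)

    def write_field_value(self, tag: str, value: float) -> None:
        self._values[tag] = value

    def location(self, tag: str) -> tuple[str, int]:
        channel = self._channels[tag]
        return channel.cabinet, channel.slot

    def read(self, controller: str, tag: str) -> float:
        # Any controller may read any characterized tag.
        if tag not in self._channels:
            raise KeyError(f"{controller}: tag {tag!r} is not characterized")
        return self._values[tag]


registry = IORegistry()
registry.characterize(IOChannel("FT-101", "CAB-A", 3, "AI"))
registry.write_field_value("FT-101", 42.5)
print(registry.read("CTRL-1", "FT-101"), registry.read("CTRL-2", "FT-101"))  # 42.5 42.5

# Late-stage change: the signal is relanded in another cabinet.
registry.characterize(IOChannel("FT-101", "CAB-B", 7, "AI"))
print(registry.location("FT-101"), registry.read("CTRL-1", "FT-101"))  # ('CAB-B', 7) 42.5
```

Both controllers read the same tag, and the relocation is invisible to them; only the registry knows where the signal is landed.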

Today, the decoupling of I/O from the controller is expanding even further with Ethernet-APL (Advanced Physical Layer). APL provides intrinsically safe Ethernet connectivity for field devices over the two-wire cabling that plants already have in place. Using the power of Ethernet, APL can potentially be used to decouple I/O from the distributed control system (DCS) or programmable logic controller (PLC).

Driven by Ethernet equivalents of today’s protocols, such as HART-IP, PROFINET and EtherNet/IP, the I/O system will become smarter, and APL will make these networks easier to install and maintain. These new I/O networks will support high-bandwidth technologies that bring many more devices, and far more data types, into the control system and on to cloud analytics systems.

Decoupling software

The potential increase in data coming through the DCS due to APL is already driving a change in how data is handled in operational technology (OT) networks, and a key element of that change is a shift toward software-defined control. Today, control hardware is designed around robustness, with hardened technology configured to withstand temperature extremes, high vibration, dirty environments and physical damage.

However, only 20% to 25% of controller installations require such a robust design; most controllers run inside clean cabinets in climate-controlled environments. By decoupling control software from the hardware, operations teams can make better decisions about the hardware best suited to their specific environment.

The first step toward fully software-defined control is moving away from a hardware-centric vision of controller design. Modern technologies empower teams to select the software functionality they need independently of the hardware required for their plant’s specific conditions. As a result, operations teams can scale their process control software to their needs without replacing their hardware.

Teams can even start moving control software off hardened systems and onto servers supporting real-time workloads. Today’s high-performance server solutions provide additional options for flexibility in hardware, while retaining the determinism, accuracy and robustness of control necessary for real-time operations.
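The hardware-independence idea can be sketched in a few lines of Python, under stated assumptions: the PI algorithm below is a textbook form, and `IOBackend`, `PIController` and `FirstOrderProcess` are hypothetical names. The control logic talks only to an I/O interface, so the same code could, in principle, be hosted on hardened controller hardware or on a server; here a simulated process stands in for the field. A real software-defined controller would also need deterministic scheduling, redundancy and qualified I/O drivers, none of which this sketch attempts.

```python
from typing import Protocol


class IOBackend(Protocol):
    """Whatever hosts the logic: a hardened controller's I/O, a server's
    network I/O driver or, here, a simulation."""

    def read_pv(self) -> float: ...

    def write_output(self, value: float) -> None: ...


class PIController:
    """Hardware-agnostic PI logic (positional form, output clamped, no
    anti-windup to keep the sketch short)."""

    def __init__(self, kp: float, ki: float, dt: float,
                 out_min: float = 0.0, out_max: float = 100.0) -> None:
        self.kp, self.ki, self.dt = kp, ki, dt
        self.out_min, self.out_max = out_min, out_max
        self._integral = 0.0

    def step(self, setpoint: float, io: IOBackend) -> float:
        error = setpoint - io.read_pv()
        self._integral += error * self.dt
        output = self.kp * error + self.ki * self._integral
        output = max(self.out_min, min(self.out_max, output))
        io.write_output(output)
        return output


class FirstOrderProcess:
    """Simulated first-order process (tau * dPV/dt = gain * u - PV) standing
    in for real field I/O; it advances one Euler step per output write."""

    def __init__(self, gain: float, tau: float, dt: float) -> None:
        self.gain, self.tau, self.dt = gain, tau, dt
        self.pv = 0.0

    def read_pv(self) -> float:
        return self.pv

    def write_output(self, value: float) -> None:
        self.pv += self.dt / self.tau * (self.gain * value - self.pv)


dt = 0.1
process = FirstOrderProcess(gain=1.0, tau=5.0, dt=dt)
controller = PIController(kp=2.0, ki=1.0, dt=dt)
for _ in range(2000):  # 200 s of simulated time
    controller.step(setpoint=50.0, io=process)
print(f"PV after 200 s: {process.pv:.1f}")
```

Swapping `FirstOrderProcess` for a different backend that implements `read_pv()` and `write_output()` would leave `PIController` untouched, which is the separation of software functionality from hardware the paragraph describes.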

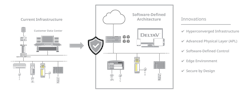

With the evolution of hypervisors, hyperconverged infrastructure, containers and workload orchestration, automation suppliers will have the tools they need to host deterministic, ultra-low latency, real-time control on appropriate hyperconverged platforms. OT teams will gain even more ability to make the best decisions about the hardware and software they want to use for their environment by mixing real-time and non-real-time workloads. This will allow these systems to benefit from virtualization, cloud connectivity, increased data mobility, lower IT infrastructure and maintenance costs, and more (Figure 1).
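The article does not say how such platforms will divide time between real-time and non-real-time workloads. One classic scheduling pattern for reasoning about it is a cyclic executive that reserves each period for real-time tasks first and hands only the leftover slack to background work. The Python sketch below simulates that pattern under stated assumptions: each task's worst-case execution time (`wcet_ms`) is simply given rather than measured, and `Task` and `CyclicExecutive` are hypothetical names, not a hypervisor or orchestrator API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass
class Task:
    name: str
    wcet_ms: float              # assumed worst-case execution time per run
    run: Callable[[], None]


class CyclicExecutive:
    """Simulated fixed-period scheduler. Real-time tasks run every cycle in a
    fixed order; background tasks only consume the slack that is left."""

    def __init__(self, period_ms: float, realtime: list[Task],
                 background: list[Task]) -> None:
        rt_load = sum(t.wcet_ms for t in realtime)
        if rt_load > period_ms:
            raise ValueError("real-time tasks do not fit in one period")
        self.slack_ms = period_ms - rt_load   # per-cycle budget for background work
        self.realtime = realtime
        self.background = deque(background)
        self.trace: list[list[str]] = []

    def run_cycle(self) -> None:
        ran = []
        for task in self.realtime:            # never skipped, never reordered
            task.run()
            ran.append(task.name)
        budget = self.slack_ms
        for task in list(self.background):    # try each background task once
            if task.wcet_ms <= budget:
                task.run()
                budget -= task.wcet_ms
                ran.append(task.name)
        self.background.rotate(-1)            # share slack fairly across cycles
        self.trace.append(ran)


def noop() -> None:
    pass


executive = CyclicExecutive(
    period_ms=10.0,
    realtime=[Task("io_scan", 1.0, noop), Task("pid", 2.0, noop)],
    background=[Task("historian", 4.0, noop), Task("analytics", 5.0, noop)],
)
for _ in range(3):
    executive.run_cycle()
for cycle in executive.trace:
    print(cycle)
# ['io_scan', 'pid', 'historian']
# ['io_scan', 'pid', 'analytics']
# ['io_scan', 'pid', 'historian']
```

Because the real-time budget is checked when the executive is built, adding background work cannot push the control tasks past their period; background tasks simply take turns in whatever slack remains.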

Decoupling data

Currently available technologies for decoupling control hardware and software are building a foundation for the boundless automation future, which is just around the corner. A key element of the data democratization that this future promises will be moving the ever-increasing volume of data efficiently from the field into critical analytics and business applications in the cloud.

Today, OT teams often struggle with this task because moving data through controllers can be complex and costly. Achieving the boundless automation goal will therefore require decoupling data from the controller.

To address this and related issues, teams will leverage seamless data highways and data streaming technologies to move, aggregate and contextualize data, relieving stress on the control system and making it easy to drive data directly to the applications that need it. Ultimately, the control function will be just another application — albeit a critical and highly stable one — in the boundless automation ecosystem.
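As a rough illustration of control becoming "just another application," the sketch below assumes a hypothetical in-process publish/subscribe bus. `DataHighway` and its topic-filter rule (a trailing `/` subscribes to a whole subtree, otherwise the match is exact) are illustrative inventions, not a specific streaming product; real data highways add persistence, security and network transport.

```python
from typing import Callable

Handler = Callable[[str, float], None]


def matches(topic_filter: str, topic: str) -> bool:
    """'area1/unit2/' covers everything under that unit, while
    'area1/unit2/FT-101' matches only that exact topic."""
    if topic_filter.endswith("/"):
        return topic.startswith(topic_filter)
    return topic == topic_filter


class DataHighway:
    """Hypothetical in-process publish/subscribe bus. Producers publish a value
    once; every subscribed application, control included, receives it."""

    def __init__(self) -> None:
        self._subscriptions: list[tuple[str, Handler]] = []

    def subscribe(self, topic_filter: str, handler: Handler) -> None:
        self._subscriptions.append((topic_filter, handler))

    def publish(self, topic: str, value: float) -> int:
        delivered = 0
        for topic_filter, handler in self._subscriptions:
            if matches(topic_filter, topic):
                handler(topic, value)
                delivered += 1
        return delivered  # number of applications that received the value


highway = DataHighway()

# The control application subscribes to the single measurement it needs.
control_inputs: list[float] = []
highway.subscribe("area1/unit2/FT-101", lambda topic, value: control_inputs.append(value))

# An analytics application subscribes to the whole unit.
unit_totals = {"count": 0, "sum": 0.0}


def unit_analytics(topic: str, value: float) -> None:
    unit_totals["count"] += 1
    unit_totals["sum"] += value


highway.subscribe("area1/unit2/", unit_analytics)

highway.publish("area1/unit2/FT-101", 12.0)  # reaches control and analytics
highway.publish("area1/unit2/TT-102", 80.0)  # reaches analytics only
print(control_inputs, unit_totals)  # [12.0] {'count': 2, 'sum': 92.0}
```

The analytics consumer gets its data from the bus rather than by polling the controller, which is the stress relief on the control system that the paragraph describes.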

Once data can move freely through the control system, OT teams will need an edge environment that lets them securely connect to that data and prepare it for use on-premises or in the cloud. Edge environments offer a simplified architecture that uses an encrypted outbound flow, with optional data diodes, to ensure secure one-way communication between the control architecture and cloud systems. This one-way communication lets teams contextualize and standardize data and then collect it in an easily accessible repository, without granting access to control and plant operation functionality.
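The contextualize, standardize and collect sequence can be sketched in Python as follows. Everything here is an assumption for illustration: the asset model, the tag names, the choice of degrees Celsius as the site standard and the `OneWayLink` class. A Python object cannot enforce one-way flow the way a hardware data diode does; `OneWayLink` only mirrors the shape of that boundary by offering a `send()` method and no way to call back into control.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical asset model that supplies the context raw tags lack.
ASSET_MODEL = {
    "TT-201": {"asset": "Reactor R-2", "measurement": "temperature", "unit": "degF"},
    "PT-202": {"asset": "Reactor R-2", "measurement": "pressure", "unit": "kPa"},
}


@dataclass(frozen=True)
class ContextualizedRecord:
    tag: str
    asset: str
    measurement: str
    value: float
    unit: str
    timestamp: str  # ISO 8601, UTC


def standardize(value: float, unit: str) -> tuple[float, str]:
    """Convert to an assumed site-wide standard (temperatures in degC)."""
    if unit == "degF":
        return (value - 32.0) * 5.0 / 9.0, "degC"
    return value, unit


def contextualize(tag: str, raw_value: float, when: datetime) -> ContextualizedRecord:
    meta = ASSET_MODEL[tag]
    value, unit = standardize(raw_value, meta["unit"])
    return ContextualizedRecord(tag, meta["asset"], meta["measurement"],
                                value, unit, when.astimezone(timezone.utc).isoformat())


class OneWayLink:
    """Outbound-only path to the repository. It exposes send() and nothing
    else, mirroring in software the one-way flow a data diode enforces in
    hardware: the repository side has no call back into control."""

    def __init__(self, repository: list) -> None:
        self._repository = repository

    def send(self, record: ContextualizedRecord) -> None:
        self._repository.append(record)


repository: list[ContextualizedRecord] = []
link = OneWayLink(repository)
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
link.send(contextualize("TT-201", 212.0, now))
link.send(contextualize("PT-202", 350.0, now))
print(repository[0].value, repository[0].unit)  # 100.0 degC
```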

Once collected in such a repository, contextualized data is easily available via widely used protocols and interfaces such as OPC UA, REST APIs and MQTT, making it usable by the appropriate cross-functional teams across the organization.
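For MQTT, making a contextualized record available mostly means choosing a topic and a payload format. The sketch below assumes a hypothetical `site/asset/measurement` topic hierarchy and a JSON payload; the MQTT rule it enforces is real (the `+` and `#` wildcard characters may not appear in a topic name that is published to), but the naming scheme is only one option.

```python
import json


def mqtt_message(site: str, record: dict) -> tuple[str, str]:
    """Build an (MQTT topic, JSON payload) pair for one contextualized record.
    The site/asset/measurement hierarchy is a hypothetical convention."""
    levels = [site, record["asset"].replace(" ", "_"), record["measurement"]]
    for level in levels:
        # '+' and '#' are subscription wildcards and are not allowed in a
        # published topic name; '/' inside a level would add unintended levels.
        # Empty levels are legal in MQTT but rejected here for clarity.
        if not level or any(ch in level for ch in "+#/"):
            raise ValueError(f"invalid topic level: {level!r}")
    topic = "/".join(levels)
    payload = json.dumps(
        {key: record[key] for key in ("tag", "value", "unit", "timestamp")},
        sort_keys=True,
    )
    return topic, payload


record = {
    "tag": "TT-201",
    "asset": "Reactor R-2",
    "measurement": "temperature",
    "value": 100.0,
    "unit": "degC",
    "timestamp": "2024-01-01T12:00:00+00:00",
}
topic, payload = mqtt_message("plant1", record)
print(topic)  # plant1/Reactor_R-2/temperature
print(payload)
```

An MQTT client library would then publish the returned pair to a broker; OPC UA and REST access would expose the same record through their own address space or URL scheme.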

More flexibility in a decoupled world

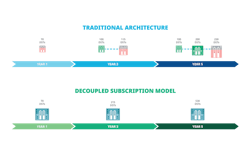

As control is decoupled from hardware, and data is decoupled from control, teams will gain access to even more flexible architectures. Instead of committing to a static architecture well before operations begin, OT teams will have access to new subscription models, allowing them to shift their operations to meet a changing plant environment or evolving market need.

With a subscription model, teams will acquire only the licenses they need, and they will retain the flexibility and autonomy to scale up or down as circumstances require. Such a strategy not only reduces the need to pay for software the facility does not use, but also reduces the need to over-engineer projects, because the operations team is no longer locked into the decisions the project team made in early design stages.

Using this type of architecture, some of the costliest decisions can be shifted from capital expenditures to operational expenditures and allocated over time, making it easier to design projects to meet changing needs (Figure 2).

Driving toward a boundless automation future

Process control has come a long way from DCS technology built on I/O cards connected to a backplane, which was physically tied to a controller to channel all operations data. The journey of decoupling has reached full speed.

Today, as teams decouple I/O from the controller using electronic marshalling technologies, APL infrastructure and Ethernet protocols, they are dramatically expanding their data capabilities. This new architecture enables them to democratize that data, breaking down silos and moving data quickly and seamlessly across the enterprise. OT teams are accomplishing this paradigm shift by taking the next step — decoupling software functionality from hardware functionality. Today, that journey is happening inside the controller, leaning heavily on edge environments to move and organize data.

Soon, however, those same teams will decouple software execution from controller hardware entirely, running it instead on the appropriate hyperconverged platforms, which can also run the software they need to make the most of their data. Simultaneously, they will decouple data from control to make it easier to move that critical data to essential cloud software systems. When all those steps are accomplished, the future, in addition to the automation, will be boundless.

About the Author

Claudio Fayad

Vice president of technology of Emerson’s Process Systems and Solutions business

Claudio Fayad serves as vice president of technology of Emerson’s Process Systems and Solutions business. Prior to this role, Claudio held a variety of positions within Emerson, from sales and marketing director to vice president of software. He joined Emerson as director of Process Systems and Solutions in May 2006, based in Brazil. Before Emerson, he began his career at Smar in 1995 as a systems engineer and progressed through various sales roles; his last role with Smar was global engineering sales director. Claudio holds a bachelor’s degree in engineering from the Universidade Estadual de Campinas and a master’s degree in business administration from Northwestern University.